In 2019, Dr. Jinho Choi, associate professor of computer science at Emory University, noticed more of his students were struggling with mental health. One day that year, a student confided in Choi that he was suicidal and had been receiving psychiatric treatment. The student said he felt lost and alone. Choi wondered what more he could do, and he noticed something else.

“I tried to help my students, but I don’t believe they tell me everything — and for good reason,” says Choi. “I’m not accessible all the time. And as much as I want to be close to them, I am an instructor. Yet I saw that some students deep in depression or anxiety sometimes did not want to talk to a human. But they were okay talking to a computer.”

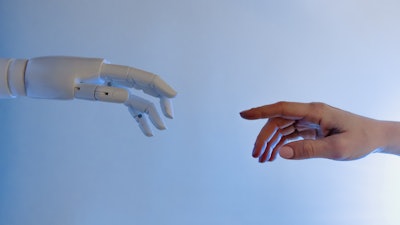

Choi studies natural language processing, which involves teaching computers to talk and text like humans. When he heard that some of his students who grappled with mental illnesses were readily turning to computers, seeing them as non-judgmental and less anxiety-inducing, he wondered if an artificial intelligence (AI)-enabled chatbot could help them.

With computer science graduate students, Choi built an AI-enabled bot called Emora, which can hold nuanced, human-like conversations with people. Last year, Emora’s team won first place in Amazon’s Alexa Prize Socialbot Grand Challenge, a national competition among university students to build chatbots. The bot is not yet sophisticated enough to be used as mental health support for students, though Choi hopes it will get there. The bot’s slogan, after all, is “Emora cares for you.”

“We want people to know it’s not just a bot trying to talk to you, but that the people developing this technology care about you,” says Choi. “We tried to use tech as a way to get involved in people’s lives in some way. We don’t see any of their personal information, but we can still program the bot in a way that can help.”

Providing additional support

A handful of psychological AI-enabled chatbots are already on the market, though they are designed to provide additional support, not to replace therapists. Clinical psychologists and engineers built chatbots such as Woebot and Tess to coach users through tough times with responsive text messages informed by evidence-based therapies.

As higher education tackles a worsening student mental health crisis on slim institutional budgets, studies are looking at the potential benefits of this new, affordable technology among college students.

Dr. Jinho Choi (center) with graduate students who helped build the chatbot Emora.

Recent studies, such as one that Bunge led, found reduced anxiety symptoms among college students who used AI chatbots. The pandemic brought to the fore what some experts call a twin epidemic of mental health struggles for college students. Conducted by the Healthy Minds research group, a national survey of college students in 2020 found that almost 40% experienced depression. One in three students reported that they have had anxiety. The Centers for Disease Control and Prevention also found that one in four people aged 18 to 24 seriously considered suicide in June 2020. But providing mental health care to students can be expensive, so colleges have been turning to more affordable telehealth solutions to meet demand.

Bunge says these chatbots arrive when demand for mental health care outstrips supply on campuses. Many universities have reported long wait lists for students seeking help as the pandemic drove a surge in telehealth requests to meet with counselors. These needs are especially acute at under-resourced institutions and in under-resourced communities. In a national survey conducted a few years ago by The Hope Lab and Healthy Minds, nearly half of community college students reported at least one mental health condition.

“If you only have therapists, you will reach only about 10% of the people suffering with mental health challenges,” says Bunge. “If you have therapists and chatbots, you may reach everyone. And if you have therapists and chatbots working together to help someone, you will likely have better outcomes.”

Many questions remain

Still, some point to ethical and technical challenges of using AI chatbots for mental health support. Questions abound: Will the product design meet the needs of underrepresented students? Will chatbots be mandatory reporters? How will they handle student privacy?

Experts remain unsure whether chatbots could be considered mandatory reporters. If they were, a student who confided in a bot about a sexual assault or harassment experience would trigger a legal obligation for the bot to report that disclosure to the college so it could investigate. Most universities would likely pay a third-party company for the bot service rather than build an in-house option. So, could a third party make that call on disclosing student details? And is that the right avenue to field serious student concerns?

Researchers are still thinking through such chatbot issues, which ultimately touch on bigger questions about higher education’s use of AI and its guardrails, or lack thereof.

“When you outsource this, then you can’t guarantee privacy with the student,” says Robert. “In many cases, companies will cut you a sweet deal with the university to do it almost for free. Why is that? Because they want the data. This is the biggest problem that people don’t talk about. And it’s not clear to me that universities know how big of an issue this is. A company could say we won’t identify the individual, but a lot of research can show you that you can often reverse-engineer the data to get to the individual.”

Dr. Camille Crittenden, executive director of the Center for Information Technology Research in the Interest of Society (CITRIS) and the Banatao Institute at the University of California, Berkeley, says there is a tremendous amount of opportunity with the chatbot technology, even amid questions.

“Some students won’t want to interact with a chatbot ever, which is totally fine, but I think that it could be really helpful,” she says, adding that it’s important “to make sure that students don’t feel like they’re surveilled” and to make sure attention is given to “the amount of agency students have to opt out.”

Crittenden notes most people tend to scroll through a product’s terms and conditions without reading, then click accept. She says to address privacy concerns, students should be told more clearly how their chatbot information will be used.

“Eventually, this can be a real boon to researchers looking at the progression of student mental health,” she says. “It can offer opportunities to get more granular about how certain populations are doing. Like if students in this dorm or that major report greater distress. Or if incidents like wildfires correlate with peaks of anxiety. It can send up flares of evidence of acute distress, almost like an early warning system.”

But Robert raised another question about the ethics of this technology.

“Some people will argue that it’s unethical to have these students developing any kind of trusting relationship with technology,” he says. “People interacting with tech like it’s a caring person can be hugely problematic. Because it’s a thing. It doesn’t care about you. What are we doing in designing tech that builds strong bonds with people by pretending that it cares?”

Potential for 'human-like' connection

The University of California (UC) system recently discussed the need to inform students of their privacy protections and data use with AI chatbots in an October report called “Responsible Artificial Intelligence.” The report details UC-wide guidelines on the ethical use of AI across ten campuses. The report stands as one of the first of its kind in higher education, breaking down AI’s use on campuses into four parts: health, human resources, policing and student experience.

“Those are all areas where we’re seeing more uptake of AI-enabled tools,” says Dr. Brandie Nonnecke, founding director of the CITRIS Policy Lab. Nonnecke was one of the report’s three co-chairs. “As our campuses turn more toward these automated tools, it’s important for them to make sure they have the guardrails in place before widespread adoption.”

Still, for many of those grappling with students’ urgent mental health needs, the findings that bots can help some people feel better are too promising to ignore. Bunge says more studies are needed, but part of what people seem to get out of the bots is knowledge.

“We sometimes talk about chatbots as interactive self-help,” says Bunge. “You can go to a therapist, read a book about mental health and in the middle is the chatbot. Because the bot is interactive, it helps with the learning process. If I lecture at you for a half hour about what depression is, it won’t be the same as if we have a dialogue about it.”

Then there’s another powerful aspect of chatbots: People tend to anthropomorphize them, which can help users feel better.

“Studies have shown people bonding with a bot to cope with social isolation during COVID,” says Bunge. “People told the bot things like, ‘I like you because I can trust you. You don’t judge me. I never told this to someone else.’ They were attributing human characteristics to it. That was really impressive.”

Bunge says he and fellow researchers interact with a chatbot every day to learn about the technology for themselves. He found himself experiencing emotions while messaging the bot.

Dr. Adam Miner, clinical assistant professor in psychiatry and behavioral sciences at Stanford University and a licensed psychologist, says, “The big part about chatbots is they talk like people do, and that triggers a social reaction in the person.”

“There’s a lot of research showing that strangely we follow social rules with robots,” Miner continues. “We can be polite. We apologize to Alexa. And that’s an opportunity for a bot’s success or failure at interpersonal understanding and empathy.”

Choi wants people to know that the people behind the bot care, even if the bot itself cannot. Robert holds a more ambivalent view.

“The ironic thing is the more people think of the chatbot as a person, the more effective it’s likely to be,” he says. “On the one hand, that’s good because they get a lot out of the technology. But on the other hand, it’s not a person who cares about you. And for students, what happens when you lose access to the technology? Once you graduate or are no longer enrolled? Then what?”

Chatbot technology is still in the early stages of development. For instance, bots are often confused by typos or unable to understand certain nuances of diction that people use. But Bunge stresses that such limitations are temporary. He even expects voice-based chatbots in the future, which would likely be better at detecting a user’s mood.

“One other important limitation, though, is if someone is in a crisis, the chatbot may not be able to proceed correctly at that very moment,” says Bunge. “But that limitation also goes to a real therapist. If my client is in a crisis right now, I have no idea.”

One potential problem arises if a student tells the chatbot about a crisis, such as having been raped or thinking of suicide, but the chatbot doesn’t recognize that crisis appropriately.

“The worry is that would cause someone to retreat,” says Miner. “But on the flip side, if you have a chatbot that recognizes the problem, then that could be more supportive to someone. If you’re a college student, you may not want to tell your roommate about a crisis. Yet a chatbot that is carefully designed could point you to resources in a non-judgmental way.”

Equity concerns in application

Yet another question that AI chatbot researchers and psychologists raise is whether the technology would help marginalized students, or other populations overlooked in a chatbot’s design and implementation, as much as it helps everyone else.

“One thing we haven’t done a good job of understanding yet is how different communities would expect a chatbot to be responding,” says Miner. “You could imagine chatbots doing a really good job with populations that have been marginalized, but if you don’t have that work on different groups’ needs upfront in the design phase, that’s a problem.”

Miner says listening to the lived experiences of different communities is critical. For example, queer communities could be reluctant to disclose a need for mental health resources, whether to a counselor or to a chatbot offering additional support, because mental health services historically pathologized same-sex relationships. Similarly, would communities of color face language discrimination if the programmers and researchers working on the technology didn’t include people from these groups? The chatbot’s language would need to be sensitive to groups with histories and continuing realities of healthcare discrimination.

“One of the big problems with chatbots is the same problem we have with all technology, which is that it’s biased in that it often doesn’t have minorities, even women, in the design and testing,” says Robert, who has researched AI and bias in robots. “So, it’s leaning towards one group of people. If they tested with a large group of White males, that may not transfer over to females or African Americans and the like.”

Robert co-authored a 2020 article about how chatbots could help bridge health disparities in Black communities if designed and implemented inclusively. As Robert and his colleagues discussed in the article, the coronavirus pandemic kickstarted greater adoption of telehealth but also brought deep healthcare inequities to the fore.

“Companies are pushing out technology a lot of the time without being clear how they are addressing different cultures, different needs,” says Robert. “You can’t treat everyone with one broad brush.”

He points out that lesser-resourced campuses are likely to be most drawn to affordable chatbots, but those campuses also educate mostly underrepresented students, namely students of color and low-income students. And these are the very populations that Robert is concerned may be overlooked in the chatbot designing process.

“Maybe the chatbot, for example, assumes that the student has access to a primary care doctor or that you have a positive relationship with your parents to talk to them as well,” says Robert. “Then there’s the language. What’s considered to be a caring and warm tone may be different by culture. And another issue is mistrust.”

Robert notes the historical mistrust of medical care within Black communities due to years of discrimination. He also says it remains unclear how people with communication-limiting disabilities would interact with a chatbot.

“If the mental health situation is so bad, it’s not exactly clear to me that the solution is going to the chatbot,” says Robert. “We get into problems when people try to overextend the use of technology when it’s not quite ready. To me, that’s what this is. It’s like automated vehicles. It works great on the highway, but not downtown. To me, that’s like a chatbot. I’m not sure we know how well this will go.”

Robert says that these issues around AI products in higher education and society at large will only become more pressing in the next decade or so.

“The whole difference between sci-fi and reality is beginning to shorten, and we haven’t really thought about these complexities,” says Robert. “But it’s tough to go back and fix the problem after it has already occurred. It’s like we’re all moving along this path at lightning speed, and it’s hard for people to look up and see what’s really going on.”

This article originally appeared in the November 25, 2021 edition of Diverse.